Preface by Simon M. Mudd

Welcome to the documentation of LSDTopoTools. This is, I am sure, obvious, but LSD stands for Land Surface Dynamics, and is named after the Land Surface Dynamics research cluster in the School of GeoSciences at the University of Edinburgh.

The project started around 2010 due to my increasing frustration with my inability to reproduce topographic analyses that I found in papers and saw at conferences. Some of the papers that had irreproducible analyses were my own! Like many scientists working with topographic data, I was using a geographic information system (GIS) to prepare figures and analyze topography, and after a long session of clicking on commercial software to get just that right figure, I did not have a record of the steps I took to get there. Mea culpa. However, I do not think I am the only person guilty of doing this! I wanted a way of doing topographic analysis that did not involve a sequence of mouse clicks.

A second motivation came when my PhD student, Martin Hurst, finished his PhD and left Edinburgh for warmer pastures in England (he is now back in Scotland, where he belongs). His PhD included several novel analyses that were clearly very useful, but also built using the python functionality in a certain commercial GIS and not very portable. I and my other PhD students wanted to run Martin’s analyses on other landscapes, but this proved to be a painful process that required numerous emails and telephone calls between Martin and our group.

This motivated me to start writing my own software for dealing with topographic data. This seemed crazy at the time. Why were we trying to reinvent a GIS? The answer is that the resulting software, LSDTopoTools, IS NOT A GIS! It is a series of algorithms that are open-source and can be used to analyze topography, and the programs that run these analyses, which we call driver programs, are intended to be redistributed such that if you have the same topographic data as was used in the original analysis, you should be able to reproduce the analysis exactly. In addition the philosophy of my research group is that each of our publications will coincide with the release of the software used to generate the figures: we made the (often frightening) decision that there would be no hiding behind cherry-picked figures. (Of course, our figures in our papers are chosen to be good illustrations of some landscape property, but other researchers can always use our code to find the ugly examples as well).

We hope that others outside our group will find our tools useful, and this document will help users get our tools working on their systems. I do plead for patience: we have yet to involve anyone in the project who has any formal training in computer science or software engineering! But we do hope to distribute beyond the walls of the School of GeoSciences at the University of Edinburgh, so please contact us for help, questions or suggestions.

Overview of the book

The purpose of this book is to get you started using LSDTopoTools, and thus the early chapters contain both prerequisite material and tutorials. The later chapters are dedicated to using our driver functions (these are programs that are used to perform specific analyses). This latter part of the book focuses on research applications; we tend to write a series of driver functions for our publications, each of which aims to give some new geophysical, hydrological or ecological insight into the functioning of landscapes. Thus the latter half of the book is both long and not really structured like a textbook, and will expand as we conduct research. However, for those simply interested in learning how to get the code working and to perform some "routine" analyses, the initial chapters are structured more like a book.

| By routine I mean something that is accepted by most professionals such as basin extraction or gradient calculations, and is not likely to be controversial. |

Chapter 1 goes into some more detail about the motivation behind the software, and involves a bit of commentary about open science. You are probably safe to skip that chapter if you do not like opinions.

Chapter 2 is a brief overview of the software you will need to get our software working on your computer, and how to actually get it installed. We also have appendices about that if you want further details.

Chapter 3 describes the preliminary steps you need to take with your topographic data in order to get it into our software. If you have read about or taken a course on GIS, this will be vaguely familiar. It will introduce GDAL, which we find to be much better than commercial software for common tasks such as projections, coordinate transformations and merging of data.

Chapter 4 explains how to get our software from its various Github repositories, and has some basic details about the structure of the software.

Chapters 5-6 are the tutorial component of the book, and have been used in courses at the University of Edinburgh.

The chapters thereafter consist of documentation of our driver functions that have been used for research, many of which feature in published papers.

Appendix A gives more detail about required software to get our package running.

Appendix B explains how to get LSDTopoTools running on Windows. It contains quite a bit of text about why you don’t really want to install our software on Windows, since installation is much more reliable, functional, and easy on Linux. Don’t worry if you don’t have a Linux computer! We will explain how to create a "virtual" Linux computer on your Windows computer. This description of creating a virtual Linux machine should also work for users of OS X.

Appendix C explains how to get LSDTopoTools running on Linux.

Appendix D explains how to get LSDTopoTools running on MacOS.

Appendix E has some more details on how the code is structured. If you are obsessive you could go one step further and look at the documentation of the source code.

Appendix F explains the different options in the analysis driver functions, which allow simple analyses driven by a single program.

Appendix G gives an overview of some of the open source visualisation tools and scripts we have developed for viewing the output of the topographic analyses, as well as other commonly used software.

Appendix H explains how to get the software running in parallel computing environments, such as on your multicore laptop, a cluster computer, or supercomputing facility. It also has tips on how to generate scripts to run multiple analyses.

1. Introduction

1.1. What is this software?

LSDTopoTools is a software package designed to analyze landscapes for applications in geomorphology, hydrology, ecology and allied fields. It is not intended as a substitute for a GIS, but rather is designed to be a research and analysis tool that produces reproducible data. The motivations behind its development were:

- To serve as a framework for implementing the latest developments in topographic analysis.
- To serve as a framework for developing new topographic analysis techniques.
- To serve as a framework for numerical modelling of landscapes (for hydrology, geomorphology and ecology).
- To improve the speed and performance of topographic analysis versus other tools (e.g., commercial GIS software).
- To enable reproducible topographic analysis in the research context.

The toolbox is organized around objects, which are used to store and manipulate specific kinds of data, and driver functions, which users write to interface with the objects.

For most readers of this documentation, you can exist in blissful ignorance of the implementation and simply stay on these pages to learn how to use the software for your topographic analysis needs.

1.2. Why don’t we just use ArcMap/QGIS? It has topographic analysis tools.

One of the things our group does as geomorphologists is try to understand the physics and evolution of the Earth’s surface by analyzing topography. Many geomorphologists will take some topographic data and perform a large number of steps to produce an original analysis. Our code is designed to automate such steps as well as make these steps reproducible. If you send another geomorphologist your code and data they should be able to exactly reproduce your analysis. This is not true of work done in ArcMap or other GIS packages. ArcMap and QGIS are good at many things! But they are not that great for analysis that can easily be reproduced by other groups. Our software was built to do the following:

- LSDTopoTools automates things that would be slow in ArcMap or QGIS.
- LSDTopoTools is designed to be reproducible: it does not depend on one individual’s mouse clicks.
- LSDTopoTools uses the latest fast algorithms so it is much faster than ArcMap or QGIS for many things (for example, flow routing).
- LSDTopoTools has topographic analysis algorithms, designed and coded by us or designed by someone else but coded by us soon after publication, that are not available in ArcMap or QGIS.
- LSDTopoTools contains some elements of landscape evolution models, which cannot be run in ArcMap or QGIS.

1.3. Quickstart for those who don’t want to read the first 4 chapters

We have prepared LSDTopoTools to be used in a Virtual Machine so that you should just have to install two bits of software, VirtualBox and Vagrant. After that, you get a small file from one of our repositories that manages all the installation for you. More details are available in the section Installing LSDTopoTools using VirtualBox and Vagrant.

If you have your own Linux server and you like doing things by hand, here is a succinct overview of what you need to do to prepare for your first analysis:

If all of the above steps make sense, you can probably just implement them and move on to the First Analysis chapter. Otherwise, you should continue reading from here.

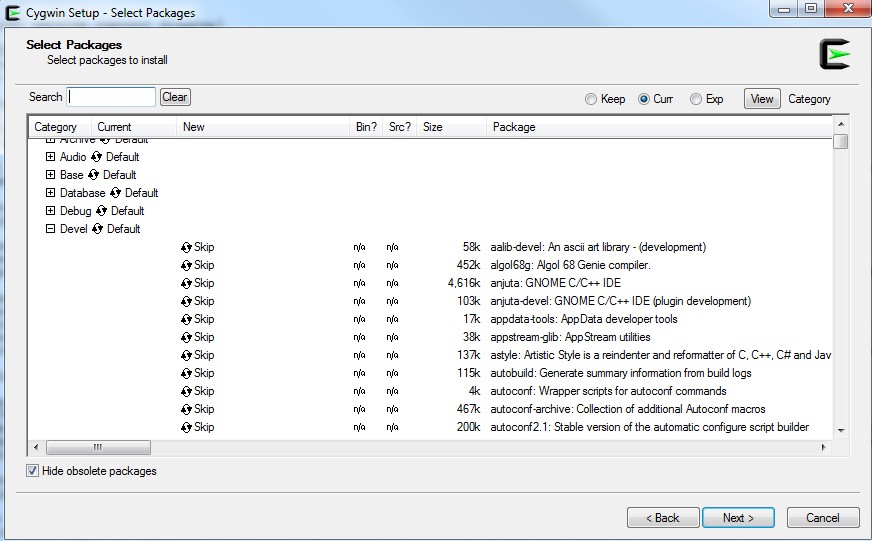

2. Required and useful software

| This chapter describes the software you need for LSDTopoTools, but you do not have to install everything yourself. This process is automated by a program called Vagrant. If you just want to get started, you can skip to these instructions: Installing LSDTopoTools using VirtualBox and Vagrant. You can also set up LSDTopoTools on Linux and MacOS using the Vagrant approach; see the chapter on Vagrant for details. |

LSDTopoTools is a collection of programs written in C++ that analyze topographic data, and can perform some modelling tasks such as fluvial incision, hillslope evolution and flood inundation. To run LSDTopoTools all that is really required is a functioning C++ compiler (which is a program that translates C++ code into 1s and 0s that your computer can understand), the make utility, and for specific components a few extra libraries. Most analyses will not need libraries.

As a standalone bit of software, LSDTopoTools does not require vast effort in installing the required software. However, if you want to look at the data produced by the software, or use some of our automation scripts, you will need to install additional packages on your system.

2.1. Essential software

| This isn’t our actual software! It is all the extra bits of software that you need to get working before you can use LSDTopoTools! |

This list goes slightly beyond getting the tools alone to run; it includes the software you need to get recent versions of the software and to visualize the output.

| Software | Notes |
|---|---|
| A decent text editor | You will need a reasonable text editor. One that has a consistent environment across operating systems and is open-source is Brackets. |
| Git | Version control software that you can use to grab working versions of our code from Github. Automatically installed using our vagrantfiles. |
| A C++ compiler | For compiling our software. We use the GNU compiler g++. Note that we don’t call this directly, but rather call it via the make utility. Automatically installed using our vagrantfiles. |
| make | The make utility: used to compile the code from makefiles. Automatically installed using our vagrantfiles. |
| Various C++ libraries | For basic analysis, no libraries are needed. For more specialized analysis, the libraries FFTW, Boost, MTL and PCL are required. See below for more information. FFTW is automatically installed using our vagrantfiles. |
| Python | We use python for both automation and visualisation (via matplotlib). You should install this on your native operating system (i.e., not on the Vagrant server). |
| GDAL | We use the GDAL utilities to prepare our datasets, e.g. to transform them into appropriate coordinate systems and into the correct formats. Automatically installed using our vagrantfiles. |

2.1.1. A decent text editor

2.1.2. Git

Git is version control software. Version control software helps you keep track of changes to your scripts, notes, papers, etc. It also facilitates communication and collaboration through the online communities github and bitbucket.

We post updated versions of our software to the Github site https://github.com/LSDtopotools. We also post versions of the software used in publications on the CSDMS github site: https://github.com/csdms.

It is possible to simply download the software from these sites but if you want to keep track of our updates or modify the software it will be better if you have git installed on your computer.

2.1.3. A compiler and other tools associated with the source code

You will need a compiler to build the software, as it is written in C++. In addition you will need a few tools to go along with the compiler. If you use our Vagrant setup these are installed for you. The things you really need are:

- A C++ compiler. We use the GNU compiler g++.
- The `make` utility. Most of the code is compiled by calling g++ from this utility.
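As a rough way to confirm that the two essential tools listed above are available before you try to compile anything, a short Python check can look for them on your PATH. This is only a sketch: it assumes a Python 3 interpreter, and the function name `find_missing_tools` is made up for illustration and is not part of LSDTopoTools.

```python
import shutil


def find_missing_tools(tools=("g++", "make")):
    """Return the names in `tools` that cannot be found on the PATH.

    `find_missing_tools` is a hypothetical helper, not an LSDTopoTools function.
    """
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Missing build tools: " + ", ".join(missing))
    else:
        print("g++ and make were both found on the PATH.")
```

If you use our Vagrant setup, you would run this inside the Vagrant box rather than on the host, since that is where the compiler is installed.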

In addition the TNT library is required, but this doesn’t require installation and we package it with our software releases. If you are wondering what it is when you download our software, it is used to do linear algebra and handle matrices.

In addition, there are a few isolated bits of the code that need these other components. Most users will not need them, but for complete functionality they are required. First, some of our makefiles include flags for profiling and debugging. We try to remove these before we release the code on Github, but every now and then one sneaks through and the code won’t compile if you don’t have a debugger or profiler. It might save you some confusion down the line if you install:

- The `gdb` utility. This is the gnu debugger.
- The `gprof` utility. This allows you to see what parts of the code are taking up the most computational time.

Next, there are a few specialized tools that are only required by some of our more advanced components.

Requirements for LSDRasterSpectral

Some of our tools include spectral analysis, and to do spectral analysis you need the Fast Fourier Transform Library. This is included in the vagrant distribution.

In the source code, you will find #include statements for these libraries, and corresponding library flags in the makefile: -lfftw3. In the RasterSpectral source files, we assume that you will have a fast fourier transform folder in your top level LSDTopoTools directory. If that paragraph doesn’t make any sense to you, don’t worry. We will go into more detail about the spectral tools within the specific chapters dedicated to those tools.

You can download FFTWv3 here: http://www.fftw.org/download.html, but if you use our Vagrant setup FFTW is installed for you.

Requirements for LSDRasterModel

Embedded within LSDTopoTools is a landscape evolution model. The model requires the Fast Fourier Transform Library (see above).

In addition it requires some numerical libraries:

- Boost, a popular C++ library.
- MTL is a library for working with sparse matrices, which are required for solving some of the equations in the landscape evolution model. You will need MTL 4. You don’t have to install anything for this, but `Boost` needs to be installed and this library goes in the `boost/numeric/mtl` subdirectory.

Requirements for swaths and point clouds

Okay, now things get a little more complicated because you want to use the Swath Profile tools or the LSDCloudBase object (which handles point clouds). These objects are dependent on a set of libraries used for analyzing point cloud data, namely:

- The `cmake` utility. This is like `make` but is required for our tools that examine point clouds, since it is required by something called the point cloud library.
- pcl: The Point Cloud Library.
- libLAS: a library for working with LAS format data.

Unfortunately these are a bit time consuming to install, because they depend on all sorts of other bits of software that must be installed first. You should see the appendices for details on how to install this software.

2.1.4. GDAL

The Geospatial Data Abstraction Library has fantastic tools for preparing your data. It performs operations like clipping data, patching data together, resampling data, reprojecting data and doing coordinate transformations. If you don’t know what those things are, don’t worry, we explain these things in the preliminary steps chapter.

You can install all of GDAL if you want, but really you will only need their utilities.

This is included in the vagrant distribution.

2.1.5. Python

Python is a programming language used by many scientists to visualize data and crunch numbers. It is NOT included in our Vagrant setup and our python scripts will not work in the vagrant server (because they make figures, and the server does not come with a windowing system). Therefore, you need to install python on your host operating system. We use it for visualization, and also for automating a number of tasks associated with topographic analysis.

Instructions on how to install python are in this section: Getting python running.

You will need:

- The python programming language.
- Scipy, for scientific python. It includes lots of useful packages like:
  - Numpy for fast numerics.
  - Matplotlib for plotting.
  - Pandas for data analysis.
- GDAL, for geospatial data processing.
- If you want to run python with a nice looking environment, you should install Spyder.

2.2. Nonessential software

There are a number of software packages that are not required to run LSDTopoTools, but that you might find useful.

First, many people use geographic information software (GIS) to visualize data. If you work at a university or a private company, you might have a license to ArcGIS, a popular commercial GIS. However, if you are not part of a large institution or your institutional license does not allow home use, it can be convenient to have an open source alternative. In addition, if you want to edit our documentation or make your own fork for notes, you might consider using the same tools we do, which require the Ruby programming language.

2.2.1. An open source GIS: QGIS

The industry standard GIS is ArcGIS, and if you are at a university you might have a site license for this software. It is not so easy to get on a personal computer, however, so there are a number of open source options that can be used as an alternative.

One alternative, and the one that will be used in these tutorials, is QGIS.

If you are familiar with ArcMap, you should be able to become proficient at QGIS in a few days. In my experience, it also has the advantage of being more stable (i.e., it crashes less) than ArcMap.

One thing that is quite nice about QGIS is the number of plugins that are available.

You should download and install QGIS from their website, and click on the `Plugins` tab to get some plugins. For example, the OpenLayers plugin allows you to quickly load satellite and map information from all sorts of vendors.

2.2.2. Documentation using asciidoctor

This book, and various other notes and websites associated with the LSDTopoTools project, have been built using something called asciidoctor. Asciidoctor is used to produce cross-linked documents and documentation, and has been designed to simplify the tool chain that takes one from writing technical documentation to producing a book. You can read about its rationale here: http://asciidoctor.org/docs/what-is-asciidoc/. The software has worked well for us.

If you want to get asciidoctor working, you will need to get some packages working in Ruby. The instructions can be found in the appendices.

2.3. Installing LSDTopoTools using VirtualBox and Vagrant

These instructions will be similar for MacOS and Linux; the only real difference is that MacOS and Linux have native ssh utilities, so you will not need putty.exe.

There are a number of ways to get LSDTopoTools working on your computer, of varying difficulty.

1. Get LSDTopoTools working natively in Windows or MacOS. This is possible, but very painful.
2. Get it working in a full Linux operating system via virtual machine software, such as virtualbox. Note that you can do this in Windows, Linux or MacOS operating systems. This is less painful and more reliable than option #1, but still painful.
3. Get it working on a locally hosted Linux server using virtualbox and vagrant. Again, you can do this on any common operating system.

Be afraid of option #1. Be very afraid. Option #2 is reliable (you can see how to do it in the appendix) but it means you will need to install all the necessary software yourself, which can take several hours. Option #3, involving Vagrant, is largely automated. It will still take some time the first time you boot your vagrant virtual machine, since a bunch of software will be installed, but we do automate this process for you.

2.3.1. First steps: Starting a Vagrant box

| You will need sufficient space on your hard disk to host a guest operating system. You also need room for the LSDTopoTools dependencies. You will struggle if you have less than 5 GB free. |
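If you are not sure how much room you have, a few lines of Python can report the free space on the drive where you plan to keep your vagrant boxes. This is a sketch under stated assumptions: it needs Python 3, the threshold simply reuses the roughly 5 gigabytes mentioned above, and the helper names `free_gigabytes` and `has_enough_space` are invented for this example.

```python
import shutil

# Threshold taken from the note above; adjust it to suit your own setup.
REQUIRED_GB = 5


def free_gigabytes(path="."):
    """Return the free space, in gigabytes (10**9 bytes), on the disk holding `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path=".", required_gb=REQUIRED_GB):
    """Return True if the disk holding `path` has at least `required_gb` free."""
    return free_gigabytes(path) >= required_gb


if __name__ == "__main__":
    print("Free space: %.1f GB" % free_gigabytes("."))
    if not has_enough_space("."):
        print("You may struggle to host a vagrant box on this disk.")
```

Run it from the folder where you intend to keep your boxes, or pass that folder as `path`.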

Vagrant is software that automates the creation and provisioning of virtual machines. What does that mean? It means that you will create a Linux server that runs inside of your day-to-day computer. This server will run even if you are using a different operating system (e.g., Windows). Vagrant machines can be configured using a vagrantfile, so you download our vagrantfile and you simply point vagrant to it and should get a working server that can run LSDTopoTools.

- You need software for running virtual machines. We recommend VirtualBox since it is both well supported and free. Download and install it. Our instructions assume you are using VirtualBox.
- Download and install Vagrant.
- Vagrant works via the command line, so you will need to know how to open a terminal on OS X or Linux (usually you can open one using `ctrl-alt-T`, but if you use Linux that means you were born knowing how to open a terminal), or a Windows powershell.
- If you are working on Windows, you will probably have to restart after installing Vagrant so that Windows can register the path to Vagrant.
- Okay, we now assume you have installed everything and are in a terminal or powershell. You need to make a directory where you keep information about your vagrant boxes. I made a folder named `vagrantboxes` and then subfolders for different boxes.
- If you are in Windows, you will need an ssh utility to communicate with your vagrant box. You should download `putty.exe` from the putty website. In Linux and MacOS ssh utilities are already installed.
- Now you should fetch one of our vagrantfiles from our git repo: https://github.com/LSDtopotools/LSDTT_vagrantfiles
- Rename the vagrantfile from the repo (either `Vagrantfile_32bit_FFTW` or `Vagrantfile_64bit_FFTW`) to simply `vagrantfile`.
- If you use our vagrant files, you will need to make a directory `LSDTopoTools` in the same directory as your folders for different vagrant boxes. For example, you might make a directory `C:\VagrantBoxes\`, and in that directory you can put both `LSDTopoTools` and `Ubuntu32_FFTW` (or some such name) directories. You will put the vagrant file in the `Ubuntu32_FFTW` directory. Your tree might look a bit like this:

      C:\vagrantboxes\
      |--Ubuntu32_FFTW
         |-- vagrantfile
      |--Ubuntu64_FFTW
         |-- vagrantfile
      |--LSDTopoTools

  It is ESSENTIAL that the LSDTopoTools folder is present and sits in the parent directory of the folder containing the vagrant file (that is, alongside the box folders, as in the tree above). If this is not true, the vagrant machine will NOT WORK. In the above file structures the vagrantfiles have been renamed from the vagrant files in our repository.
- Go into the folder with the operating system you want (e.g. `Ubuntu32_FFTW`):

      PS: > cd C:\VagrantBoxes
      PS: > cd Ubuntu32_FFTW

- Now start your vagrant box (this might take some time since it has to fetch stuff from the internet):

      PS: > vagrant up

  You do not need to download a "base box" (that is a Linux operating system, in this case 32 bit Ubuntu) before you run `vagrant up`: Vagrant does this for you. However, if you are running `vagrant up` for the first time Vagrant will download the box for you, which will take some time (it is ~400 MB). You will only need to download the base box once.
- Congratulations! You now have a functioning Vagrant box!! Now you need to log on to the box.

If you want to update the base box you can use the `vagrant box update` command from the powershell or terminal windows.
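Because the folder layout described above is the most common thing to get wrong, it can be worth checking it before running `vagrant up`. The following Python sketch does that for one box folder. It assumes Python 3; `check_layout` is a hypothetical helper written for this example, and it only tests the two conditions described in the steps above (a vagrantfile in the box folder, and an `LSDTopoTools` folder next to the box folder).

```python
import tempfile
from pathlib import Path


def check_layout(box_dir):
    """Return a list of problems with the folder layout (empty if none found).

    `box_dir` is the folder holding the vagrantfile, e.g. Ubuntu32_FFTW.
    """
    box_dir = Path(box_dir)
    problems = []
    # Accept either capitalisation of the file name.
    names = {p.name.lower() for p in box_dir.iterdir()} if box_dir.is_dir() else set()
    if "vagrantfile" not in names:
        problems.append("no vagrantfile found in %s" % box_dir)
    # LSDTopoTools must sit alongside the box folder, not inside it.
    if not (box_dir.parent / "LSDTopoTools").is_dir():
        problems.append("no LSDTopoTools folder found in %s" % box_dir.parent)
    return problems


if __name__ == "__main__":
    # Build a throwaway copy of the example tree and check it.
    with tempfile.TemporaryDirectory() as root:
        box = Path(root) / "Ubuntu32_FFTW"
        box.mkdir()
        (box / "vagrantfile").touch()
        (Path(root) / "LSDTopoTools").mkdir()
        print(check_layout(box) or "Layout looks fine.")
```

To check a real setup you would call `check_layout` with the path to your own box folder, for example `C:\VagrantBoxes\Ubuntu32_FFTW` from the example above.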

2.3.2. Logging on to your Vagrant box

- All right! Your Vagrant box is running. Other than a sense of vague accomplishment, this doesn’t really help you run LSDTopoTools. You need to log on to the box. You will operate your vagrant box as a server: you log into the machine and run code on it, but you won’t have pretty windows to look at. You will run everything through an ssh terminal, using a command line interface.
- We do this using ssh.
- If you are starting from a Linux or OSX machine, an ssh client is built into your command prompt and you can just type `vagrant ssh` into the command prompt.
- If you are on Windows, you need to download putty.exe and run it.
- In putty, set the host to 127.0.0.1 and the port to 2222. These are vagrant’s default settings.
- You will need to add the RSA key to your cache (just say yes: remember you are not connecting to the internet where baddies can spy on you but rather a server running on your own computer).
- Now you need to log in. Your vagrant box has a username of vagrant and a password of vagrant.
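If putty or ssh refuses to connect, one thing worth ruling out is that nothing is listening on the address given above (for example because the box is not running). The Python sketch below simply tries to open a TCP connection. It assumes the default host and port quoted above (127.0.0.1 and 2222); `port_is_open` is a made-up helper name, and a successful connection only shows that something is listening there, not that the login will succeed.

```python
import socket


def port_is_open(host="127.0.0.1", port=2222, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Something is listening on 127.0.0.1:2222.")
    else:
        print("Nothing answered on 127.0.0.1:2222. Is the vagrant box up?")
```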

2.3.3. Your Vagrant box and file syncing

- So you are logged in. Now what? It turns out Vagrant has done some clever things with your files.
- Vagrant can sync folders across your Vagrant box and your host computer (that is, the computer you started vagrant from).
- When you log in to your vagrant box, you will not be in the same folder where I have built the LSDTopoTools file structures. You need to navigate down to this:

      $ pwd
      /STUFF
      $ cd ..
      $ cd ..
      $ pwd
      /STUFF
      $ cd LSDTopoTools
      $ ls
      STUFF

  You can also jump directly there:

      $ cd /LSDTopoTools

- As you can see above, the LSDTopoTools folder contains folders for different LSDTopoTools packages and for topographic datasets.
- Here is the amazing thing: the files that are in the LSDTopoTools folder in your vagrant box ARE ALSO visible, and synced, in your host computer. So if you use LSDTopoTools to do some analysis within your vagrant box, you will be able to see the files within your host computer as well. This means that you can, for example, do a Linux based LSDTopoTools analysis and then plot that analysis in a GIS on your host Windows box without having to transfer files. Not only that, but you can modify the code, update python scripts, change parameter files, etc., with your favourite text editor in Windows (or OSX, or whatever) and those files will be visible to your Vagrant box. Fantastic!

2.3.4. Updating to the latest versions of the software

To check out the latest version of our software you can run the vagrant provision command:

PS: > vagrant up

PS: > vagrant provision

2.3.5. Shutting things down

When you are finished with your session, you just need to go into the powershell or a terminal and type:

PS: > vagrant halt

2.3.6. If you want to start from scratch

If you want to remove the virtual machine, start it up and then run vagrant destroy:

PS: > vagrant up

PS: > vagrant destroy

2.3.7. Brief notes for setting up your own Vagrant server

| This section is for customising your vagrant environment (or rather, your Ubuntu environment that vagrant sets up for you) and can be safely ignored by 95% of LSDTopoTools users. We include the below notes for obsessive hackers who have nothing better to do. |

We have written Vagrant files for you so you don’t have to customise your working environment, but if you want to set up your own Vagrant boxes with your own software here are some notes.

Initiating a Vagrant box

- Go into an empty folder to start a new Vagrant box.
- Initiate Vagrant with:

      PS> vagrant init

  Alternatively you can initiate with a base box. In this example we use the Ubuntu precise 32 base box:

      PS> vagrant init ubuntu/precise32

- This command (`init`) will simply make a vagrant file. To get the server up and running you need to `up` it. Before you do that you probably want to modify the vagrant file.
- One of the things you probably need to modify is the memory assigned to your guest vagrant box. In the vagrant file you should have:

      config.vm.provider "virtualbox" do |vb|
        # Customize the amount of memory on the VM:
        vb.memory = "3000"
      end

  The default memory is something small, and the problem with it is that it will take the guest operating system too long to boot, and vagrant will time out. I would give the vagrant box 3-4 GB of memory.
- Now you can `up` your vagrant box. In the folder with the vagrant file, type:

      PS> vagrant up

- If this is the first time booting the linux machine, this will take a while.

Notes on the base box

Vagrant sets up a Linux server living in your computer (your computer is called the host computer). The server will run a Linux operating system, and you need to choose a functioning base system from the available vagrant base boxes. Here we have started with ubuntu/precise32. You might want to try other base boxes; they can be found at the atlas website.

| If you choose a base box that you do not already have (and you start with none), vagrant will download it. They are big!! Usually over 500 MB (each is a fully operational linux operating system). You will either need a fast internet connection or a lot of time. Make sure you also have enough room on your hard disk. |

You do need to be careful with base boxes!

| Not all base boxes work! On many windows machines, you can only run a 32 bit version of linux, even though you are almost certainly running 64 bit windows. You can change this by going into your BIOS and changing the settings, but that is dangerous and if you do not know what your BIOS is do not even think about attempting to change these settings. |

In testing, I found many base boxes did not work at all. The one that worked well for me was the ubuntu/precise32 box. You can get this started with:

PS> vagrant init ubuntu/precise32

Alternatively you can just vagrant init an empty vagrant instance and change the box in the vagrantfile with config.vm.box = "ubuntu/precise32".

You can update your base box with the command vagrant box update.

Details of provisioning

If you change your vagrantfile with the box still running, you can run the new provisioning with:

PS> vagrant provision

If you have downloaded our vagrant files, the provisioning of your virtual server should be automatic. However, you may wish to know what is happening during the provisioning, so here are some notes.

To install software, we use the shell provisioning system of vagrant. This should go into the vagrantfile and will look a bit like this:

    config.vm.provision "shell", inline: <<-SHELL
      sudo apt-get update
      sudo apt-get install -y git
    SHELL

In the above shell command, we are installing git. The -y flag is important since apt-get will ask if you actually want to download the software and if you do not tell it -y from the shell script it will just abort the installation.

You sync folders like this:

config.vm.synced_folder "../LSDTopoTools", "/LSDTopoTools"

Where the first folder is the folder on the host machine and the second is the folder on the Vagrant box.

2.4. Getting python running

A number of our extensions and visualisation scripts are written in Python. To get these working you need to install various packages in Python.

| If you are using Vagrant to set up LSDTopoTools in a Virtual Machine, we recommend installing Python using Miniconda on your host machine, rather than installing within your Virtual Linux box. |

2.4.1. First option: use Miniconda (works on all operating systems)

We have found the best way to install python is miniconda. We will use Python 2.7, so use the Python 2.7 installer.

| If you install Python 3.5 instead of 2.7 GDAL will not work. |

Once you have installed that, you can go into a powershell or terminal window and get the other stuff you need:

$ conda install scipy

$ conda install matplotlib

$ conda install pandas

$ conda install gdal

$ conda install spyder

The only difference in Windows is that your prompt in powershell will say PS>.

| Spyder will not work on our vagrant server, so you need to install this on your host computer. |

To run spyder you just type spyder at the command line.

| Spyder needs an older version of a package called PyQt. If spyder doesn’t start correctly, run: |

$ conda install pyqt=4.10 -f

2.4.2. Getting python running on Linux (and this should also work for OSX) NOT using miniconda

If you don’t want to use miniconda, it is quite straightforward to install these on a Linux or OSX system:

$ sudo apt-get install python2.7

$ sudo apt-get install python-pip

or

$ yum install python2.7

$ yum install python-pip

In OSX, you need a package manager such as Homebrew, and you can follow similar steps (but why not use miniconda?).

After that, you need:

- Scipy for numerics.
- Numpy for numerics.
- Matplotlib for visualisation.
- Pandas for working with data.
- GDAL python tools for working with geographic data.
- Spyder for having a working environment. This last one is not required but useful if you are used to Matlab.

You can get all this with a combination of pip and sudo, yum or homebrew, depending on your operating system.

For example, with an Ubuntu system, you can use:

$ sudo apt-get install python-numpy python-scipy python-matplotlib python-pandas

$ sudo apt-get install spyder

The GDAL python tools are a bit harder to install; see here: https://pypi.python.org/pypi/GDAL/.

2.5. Setting up your file system and keeping it clean ESSENTIAL READING IF YOU ARE NEW TO LINUX

The past decade has seen rapid advances in user interfaces, the result of which is that you don’t really have to know anything about how computers work to use modern computing devices. I am afraid nobody associated with this software project is employed to write a slick user interface. In addition, we don’t want a slick user interface since sliding and tapping on a touchscreen to get what you want does not enhance reproducible data analysis. So I am very sorry to say that to use this software you will need to know something about computers.

If you are not familiar with Linux or directory structures this is essential reading.

2.5.1. Files and directories

Everything in a computer is organised into files. These are collections of numbers and/or text that contain information and sometimes programs. These files need to be put somewhere so they are organised into directories which can be nested: i.e., a directory can be inside of another directory. Linux users are born with this knowledge, but users of more intuitive devices and operating systems are not.

Our software is distributed as source code. These are files with all the instructions for the programs. They are not the programs themselves! Most of the instructions live in objects that have both a cpp and hpp file. They are stored in a directory together. Contained alongside these files are a few other directories. There is always a TNT folder for storing some files for matrix and vector operations. There will always be another directory with some variant of the word driver in it. Then there might be some other directories. Do not move these files around! Their location relative to each other is important!

Inside the folder that has driver in the name there will be yet more cpp files. There will also be files with the extension make. These make files are instructions to change the source code into a program that you can run. The make file assumes that files are in specific directories relative to each other so it is important not to move the relative locations of the cpp files and the various directories such as the TNT directory. If you move these files then you will not be able to make the programs.

We tend to keep data separate from the programs. So you should make a different set of folders for your raw data and for the outputs of our programs.

| DO NOT put any spaces in the names of your files or directories. Frequently LSDTopoTools asks for paths and filenames, and tells them apart with spaces. If your filename or directory has a space in it, LSDTopoTools will think the name has ended in the middle and you will get an error. |
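If you want to check an existing set of folders against the no-spaces rule above, a few lines of Python will do it. This is only a sketch: the function name find_names_with_spaces is made up for this example and is not part of LSDTopoTools.

```python
import os


def find_names_with_spaces(top_dir):
    """Return paths under top_dir whose file or directory name contains a space.

    Hypothetical helper for illustration; it is not part of LSDTopoTools.
    """
    offenders = []
    for current_dir, dir_names, file_names in os.walk(top_dir):
        # Only the name itself is checked, not the path leading to top_dir.
        for name in dir_names + file_names:
            if " " in name:
                offenders.append(os.path.join(current_dir, name))
    return sorted(offenders)
```

Calling find_names_with_spaces on the folder that holds your data returns a list of everything you should rename before pointing our programs at it.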

2.5.2. Directories in Vagrant

If you use our vagrantfiles then you will need some specific directories. Our instructions assume this directory structure so if you have your own Linux box and you want to copy and paste things from our instructions then you should replicate this directory structure.

| If you use vagrant there will be directories in your host machine (i.e., your computer) and on the client machine (i.e., the Linux virtual machine that lives inside of your host machine). The file systems of these two computers are synced so if you change a file in one you will change the file in the other! |

Vagrant directories in windows

In windows you should have a directory called something like VagrantBoxes. Inside this directory you need directories to store the vagrantfiles, and a directory called LSDTopoTools. The directories need to look like this

|-VagrantBoxes
  |-LSDTopoTools
  |-Ubuntu32_FFTW
    | vagrantfile
  |-Ubuntu32
    | vagrantfile

The names VagrantBoxes and Ubuntu32, Ubuntu32_FFTW don’t really matter; you just need to have a place to contain all your files associated with vagrant, and within it subdirectories for vagrantfiles. If your vagrantfile is for an Ubuntu 32 bit system that includes FFTW then you might call the folder Ubuntu32_FFTW.

| There MUST be an LSDTopoTools directory one level above your vagrantfile. This directory name is case sensitive. |

When you make the LSDTopoTools directory it will initially be empty. When you run vagrant up it will fill up with stuff (see below).

Vagrant directories in the client Linux machine

If you use our vagrantfiles to set up LSDTopoTools, they will construct a file system. It will have a directory LSDTopoTools in the root directory and within that directory will be two directories called Git_projects and Topographic_projects. The file system looks like this:

|-LSDTopoTools
  |-Git_projects
    |-LSDTopoTools_AnalysisDriver
      |- lots and lots of files and some directories
    |-LSDTopoTools_ChannelExtraction
      |- lots and lots of files and some directories
    |-More directories that hold source code
  |-Topographic_projects
    |-Test_data
      |- Some topographic datasets

These files are in the root directory so you can get to them by just using cd / and then the name of the folder:

$ cd /LSDTopoTools/Git_projects

Here is the clever bit: after you have run vagrant up these folders will also appear in your host system. But they will be in your VagrantBoxes folder. So in Linux the path to the git projects folder is /LSDTopoTools/Git_projects and in Windows the path will be something like C:\VagrantBoxes\LSDTopoTools\Git_projects.

2.5.3. Difference between the source code and the programs

You can download our various packages using git, but many of them will be downloaded automatically by our vagrantfiles. Suppose you were looking at the LSDTopoTools_ChiMudd2014 package. In our Linux vagrant system, the path to this would be /LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014.

If you type:

$ cd /LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014

$ ls

You will see a large number of files ending with .cpp and .hpp. These are files containing the instructions for computation. But they are not the program! You need to translate these files into something the computer can understand, and for this you use a compiler. But there is another layer: because we use lots of files we need to compile lots of files, so we use something called a makefile, which is a set of instructions about what bits of source code to mash together to make a program.

The makefiles are in a folder called driver_functions_MuddChi2014. All of our packages have a directory with some variation of driver in the name. You need to go into this folder to get to the makefiles. You can see them with:

$ cd /LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014/driver_functions_MuddChi2014

$ ls *.make

This will list a bunch of makefiles. By running the command make -f and then the name of a makefile you will compile a program. The -f just tells make that you are using a makefile with a specific name and not a file called make.

Calling make with a makefile results in a program that has the extension .out or .exe. The extension doesn’t really matter. We could have told the makefile to give the program the extension .hibswinthecup and it should still work. BUT it will only work with the operating system within which it was compiled: in this case the Ubuntu system that vagrant set up.

The crucial thing to realise here is that the program is located in the driver_functions_MuddChi2014 directory. If you want to run this program you need to be in this directory. In Linux you can check what directory you are in by typing pwd.

2.5.4. Know where your data is

When you run a makefile it will create a program sitting in some directory. Your data, if you are being clean and organised, will sit somewhere else.

| You need to tell the programs where the data is! People raised on smartphones and tablets seem to struggle with this. In many laboratory sessions I have the computational equivalent of this conversation: Student: "I can’t get into my new apartment. Can you help?" Me: "Where did you put your keys?" Student: "I don’t know." Please don’t be that student. |

Most of our programs need to look for another file, sometimes called a driver file and sometimes called a parameter file. We probably should use a consistent naming convention for these files but I’m afraid you will need to live with our sloppiness. You get what you pay for, after all.

The programs will be in your source code folders, so for example, you might have a program called get_chi_profiles.exe in the /LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014/driver_functions_MuddChi2014 directory. You then have to tell this program where the driver or parameter file is:

$ pwd

/LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014/driver_functions_MuddChi2014

$ ./git_get_profiles.exe /LSDTopoTools/Topographic_projects/Test_data/ Example.driver

In the above examples, ./git_get_profiles.exe is calling the program.

/LSDTopoTools/Topographic_projects/Test_data/ is the folder where the driver/parameter file is. We tend to keep the topographic data and parameter files together. The final / is important: some of our programs will check for it but others won’t (sorry) and they will not run properly without it.

Example.driver is the filename of the driver/parameter file.

In the above example it means that the parameter file will be in the folder /LSDTopoTools/Topographic_projects/Test_data/ even though your program is in a different folder (/LSDTopoTools/Git_projects/LSDTopoTools_ChiMudd2014/driver_functions_MuddChi2014/).
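If you call the programs from a script rather than typing the command, the two rules above (no spaces, and a final / on the folder) are easy to enforce before the program ever runs. The sketch below assumes a program that takes a folder and a driver filename as its two arguments, as in the example above; build_driver_command is a hypothetical helper, not something shipped with LSDTopoTools.

```python
def build_driver_command(program, data_dir, driver_file):
    """Return the argument list for a program that takes a folder and a driver file.

    Hypothetical helper for illustration; it is not part of LSDTopoTools.
    """
    for item in (data_dir, driver_file):
        # The programs tell paths and filenames apart with spaces.
        if " " in item:
            raise ValueError("No spaces allowed in paths or filenames: " + repr(item))
    # Some programs do not check for the final slash, so always add it.
    if not data_dir.endswith("/"):
        data_dir = data_dir + "/"
    return [program, data_dir, driver_file]
```

The returned list can be handed to subprocess.call from the Python standard library, provided you run the script from the directory that contains the program.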

2.6. Summary

This chapter has given an overview of what software is necessary to use LSDTopoTools. The appendices contain information about installing this software on Windows, Linux, and MacOS operating systems, but these are only for stubborn people who like to do everything by hand. If you want to just get things working, use our vagrantfiles.

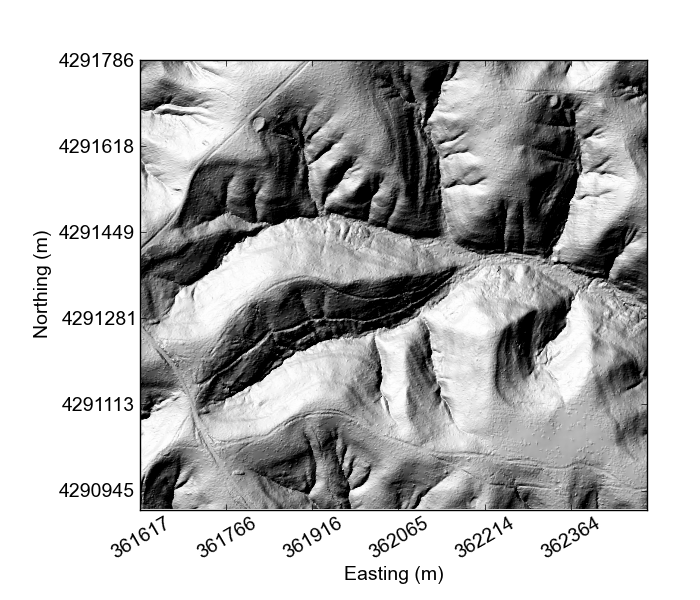

3. Preparing your data

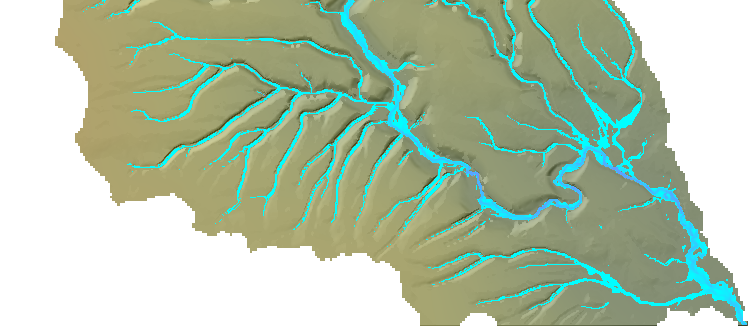

In this section we go over some of the steps required before you use the LSDTopoTools software package. The most basic step is to get some topographic data! Topographic data comes in a number of formats, so it is often necessary to manipulate the data a bit to get it into a form LSDTopoTools will understand. The main ways in which you will need to manipulate the data are changing the projection of the data and changing its format. We explain raster formats and projections first, and then move on to the tool that is best suited for projecting and transforming rasters: GDAL. Finally we describe some tools that you can use to have a look at your raster data before you send it to LSDTopoTools.

3.1. The terminal and powershells

Our software works primarily through a terminal (in Linux) or powershell (in Windows) window. We don’t have installation notes for OSX but we recommend if you are on MacOS that you use the vagrant setup, which means you will have a nice little Linux server running inside your MacOS machine, and can follow the Linux instructions. A terminal or powershell window is an interface through which you can issue text-based commands to your computer.

In Windows, you can get powershell by searching for programs. If you are on Windows 8 (why are you on Windows 8??), use the internet to figure out how to get a powershell open.

Different flavors of Linux have different methods in which to open a terminal, but if you are using Ubuntu you can type Ctrl+Alt+T or you can find it in the application menu.

On other flavors of Linux (for example, those using a Gnome or KDE desktop) you can often get the terminal window by right-clicking anywhere on the desktop and selecting the terminal option.

In KDE a terminal is also called a "Konsole".

Once you have opened a terminal window you will see a command prompt. In Linux the command prompt will look a bit like this:

user@server $

or just:

$

whereas the powershell will look a bit like this:

PS C:\Home >

Once you start working with our tools you will quickly be able to open a terminal window (or powershell) in your sleep.

3.2. Topographic data

Topographic data comes in a number of formats, but at a basic level most topographic data is in the form of a raster. A raster is just a grid of data, where each cell in the grid has some value (or values). The cells are sometimes also called pixels. With image data, each pixel in the raster might have several values, such as the value of red, green and blue hues. Image data thus has bands: each band is the information pertaining to the different colors.

Topographic data, on the other hand, is almost always single band: each pixel or cell only has one data value: the elevation. Derivative topographic data, such as slope or aspect, also tends to be in single band rasters.

It is possible to get topographic data that is not in raster format (that is, the data is not based on a grid). Occasionally you find topographic data built on unstructured grids, or point clouds, where each elevation data point has a location in space associated with it. This data format takes up more space than raster data, since in a raster you only need to supply the elevation data: the horizontal positions are determined by where the data sits in the grid. Frequently LiDAR data (LiDAR stands for Light Detection and Ranging, and is a method for obtaining very high resolution topographic data) is delivered as a point cloud and you need software to convert the point cloud to a raster.
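To make the point about horizontal positions concrete, here is a minimal sketch of how the location of a cell follows from its row and column. It assumes the raster is described by the coordinates of its lower left corner, a single cell size, and rows counted from the top, which is how many raster headers describe a grid; the function and argument names are chosen for this example only.

```python
def cell_centre(row, col, xllcorner, yllcorner, cellsize, nrows):
    """Return the (x, y) coordinates of the centre of a raster cell.

    Assumptions for this sketch: row 0 is the top row, column 0 is the
    left column, and (xllcorner, yllcorner) is the lower left corner of
    the whole raster.
    """
    x = xllcorner + (col + 0.5) * cellsize
    # Rows count downwards from the top, so flip them to measure from the bottom.
    y = yllcorner + (nrows - row - 0.5) * cellsize
    return x, y
```

For a raster with 3 rows, 10 m cells and a lower left corner at (1000, 2000), cell_centre(0, 0, 1000.0, 2000.0, 10.0, 3) gives (1005.0, 2025.0). A point cloud has to store those two numbers for every elevation value, which is why it takes up more space.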

For most of this book, we will assume that your data is in raster format.

3.3. Data sources

Before you can start analyzing topography and working with topographic data, you will need to get data and then get it into the correct format. This page explains how to do so.

3.3.1. What data does LSDTopoToolbox take?

The LSDTopoToolbox works predominantly with raster data; if you don’t know what that is you can read about it here: http://en.wikipedia.org/wiki/Raster_data. In most cases, the raster data you will start with is a digital elevation model (DEM). Digital elevation models (and rasters in general) come in all sorts of formats. LSDTopoToolbox works with three formats:

| Data type | file extension | Description |
|---|---|---|
| ASCII | .asc | This format is in plain text and can be read by a text editor. The advantage of this format is that you can easily look at the data, but the disadvantage is that the file size is extremely large (compared to the other formats, e.g. .flt). |
| Float | .flt | This is a binary file format, meaning that you can’t use a text editor to look at the data. The file size is greatly reduced compared to .asc files. |
| ENVI | .bil | This is the recommended format, because it works best with GDAL (see the section GDAL), and because it retains georeferencing information. |

Below you will find instructions on how to get data into the correct format: data is delivered in a wide array of formats (e.g., ESRI bil, DEM, GeoTiff) and you must convert this data before it can be used by LSDTopoTools.

3.3.2. Downloading data

If you want to analyze topography, you should get some topographic data! The last decade has seen incredible gains in the availability and resolution of topographic data. Today, you can get topographic data from a number of sources. The best way to find this data is through search engines, but below are some common sources:

| Source | Data type | Description and link |
|---|---|---|
| | LiDAR | Lidar raster and point cloud data, funded by the National Science Foundation. http://www.opentopography.org/ |
| | LiDAR and IfSAR | Lidar raster and point cloud data, and IfSAR (5 m resolution or better), collated by NOAA. http://www.csc.noaa.gov/inventory/# |
| | Various (including IfSAR and LiDAR, and satellite imagery) | United States elevation data hosted by the United States Geological Survey. Mostly data from the United States. http://viewer.nationalmap.gov/basic/ |
| | Various (including LiDAR, IfSAR, ASTER and SRTM data) | Another USGS data page. This has more global coverage and is a good place to download SRTM 30 m data. http://earthexplorer.usgs.gov/ |
| | LiDAR | This site has lidar data from Spain: http://centrodedescargas.cnig.es/CentroDescargas/buscadorCatalogo.do?codFamilia=LIDAR |
| | LiDAR | Finland’s national LiDAR dataset: https://tiedostopalvelu.maanmittauslaitos.fi/tp/kartta?lang=en |
| | LiDAR | Denmark’s national LiDAR dataset: http://download.kortforsyningen.dk/ |
| | LiDAR | LiDAR holdings of the Environment Agency (UK): http://www.geostore.com/environment-agency/WebStore?xml=environment-agency/xml/application.xml |
| | LiDAR | Lidar from Trentino, a province in the Italian Alps: http://www.lidar.provincia.tn.it:8081/WebGisIT/pages/webgis.faces |

3.4. Projections and transformations

Many of our readers will be aware that our planet is well approximated as a sphere. Most maps and computer screens, however, are flat. This causes some problems.

To locate oneself on the surface of the Earth, many navigational tools use a coordinate system based on a sphere, first introduced by the "father of geography" Eratosthenes of Cyrene. Readers will be familiar with this system through latitude and longitude.

A coordinate system based on a sphere is called a geographic coordinate system. For most of our topographic analysis routines, a geographic coordinate system is a bit of a problem because the distance between points is measured in angular units and these vary as a function of position on the surface of the planet. For example, a degree of longitude is equal to 111.320 kilometers at the equator, but only 28.902 kilometers at a latitude of 75 degrees! For our topographic analysis tools we prefer to measure distances in length rather than in angular units.
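You can see how quickly a degree of longitude shrinks with a short calculation. The sketch below treats the Earth as a perfect sphere, so the length of a degree of longitude is just the equatorial value multiplied by the cosine of the latitude. This is an approximation: at 75 degrees it gives roughly 28.8 kilometers rather than the 28.902 kilometers quoted above, a difference that presumably comes from the Earth not being a perfect sphere.

```python
import math

# Length of one degree of longitude at the equator, as quoted in the text.
EQUATOR_KM_PER_DEGREE = 111.320


def km_per_degree_longitude(latitude_degrees):
    """Approximate length in km of one degree of longitude at a given latitude.

    Spherical approximation only: the equatorial value scaled by cos(latitude).
    """
    return EQUATOR_KM_PER_DEGREE * math.cos(math.radians(latitude_degrees))
```

The same angular distance therefore covers half as much ground at 60 degrees latitude as it does at the equator, which is why we want projected coordinates before measuring anything.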

To convert locations on the surface of a sphere to locations on a plane (e.g., a paper map or your computer screen), a map projection is required. All of the LSDTopoTools analysis routines work on a projected coordinate system.

There are many projected coordinate systems out there, but we recommend the Universal Transverse Mercator (UTM) system, since it is a widely used projection system with units of meters.

So, before you do anything with topographic data you will need to:

- Check to see if the data is in a projected coordinate system.
- Convert any data in a geographic coordinate system to a projected coordinate system.

Both of these tasks can be done quickly and easily with GDAL software tools.

3.5. GDAL

| If you installed our software using vagrant this will be installed automatically. Instructions are here: Installing LSDTopoTools using VirtualBox and Vagrant. |

Now that you know something about data formats, projections and transformations (since you read very carefully the preceding sections), you are probably hoping that there is a simple tool with which you can manipulate your data. Good news: there is! If you are reading this book you have almost certainly heard of GIS software, which is inviting since many GIS software packages have a nice, friendly and shiny user interface that you can use to reassuringly click on buttons. However, we do not recommend that you use GIS software to transform or project your data. Instead we recommend you use GDAL.

GDAL (the Geospatial Data Abstraction Library) is a popular software package for manipulating geospatial data. GDAL allows for manipulation of geospatial data in the Linux operating system, and for most operations is much faster than GUI-based GIS systems (e.g., ArcMap).

Here we give some notes on common operations in GDAL that one might use when working with LSDTopoTools. Many of these operations are carried out using GDAL’s utility programs, which can be downloaded from http://www.gdal.org/gdal_utilities.html. The appendices have instructions on how to get the GDAL utilities working. You will also have to be able to open a terminal or powershell. Instructions on how to do this are in the appendices.

3.5.1. Finding out what sort of data you’ve got

One of the most frequent operations in GDAL is just to see what sort of data you have. The tool for doing this is gdalinfo which is run with the command line:

$ gdalinfo filename.ext

where filename.ext is the name of your raster.

This is used mostly to:

- See what projection your raster is in.
- Check the extent of the raster.

This utility can read Arc formatted rasters but you need to navigate into the folder of the raster and use the .adf file as the filename. There is sometimes more than one .adf file so you’ll just need to use ls -l to find the biggest one.

3.5.2. Translating your raster into something that can be used by LSDTopoToolbox

Say you have a raster but it is in the wrong format (LSDTopoToolbox at the moment only takes .bil, .flt and .asc files) and in the wrong projection.

| LSDTopoToolbox performs many of its analyses on the basis of projected coordinates. |

You will need to be able to both change the projection of your rasters and change the format of your raster. The two utilities for this are:

Changing raster projections with gdalwarp

The preferred coordinate system is WGS84 UTM coordinates. To convert to this coordinate system you use gdalwarp. The coordinate system of the source raster can be detected by GDAL, so you use the flag -t_srs to assign the target coordinate system. Details about the target coordinate system go in quotes; you want:

'+proj=utm +zone=XX +datum=WGS84'

where XX is the UTM zone. You can find a map of UTM zones here: http://www.dmap.co.uk/utmworld.htm. For example, if you want zone 44 (where the headwaters of the Ganges are), you would use:

'+proj=utm +zone=44 +datum=WGS84'

Put this together with a source and target filename:

$ gdalwarp -t_srs '+proj=utm +zone=XX +datum=WGS84' source.ext target.ext

so one example would be:

$ gdalwarp -t_srs '+proj=utm +zone=44 +datum=WGS84' diff0715_0612_clip.tif diff0715_0612_clip_UTM44.tif

Note that if you are using UTM and you are in the southern hemisphere, you should use the +south flag:

$ gdalwarp -t_srs '+proj=utm +zone=19 +south +datum=WGS84' 20131228_tsx_20131228_tdx.height.gc.tif Chile_small.tif

There are several other flags that could be quite handy (for a complete list see the GDAL website).

- -of format: This sets the format to the selected format. This means you can skip the step of changing formats with gdal_translate. We will repeat this later but the formats for LSDTopoTools are:

Table 4. Format of outputs for GDAL

| Flag | Description |
|---|---|
| ASCGrid | ASCII files. These files are huge so try not to use them. |
| EHdr | ESRI float files. This used to be the only binary option but GDAL seems to struggle with it and it doesn’t retain georeferencing. |
| ENVI | ENVI rasters. This is the preferred format. GDAL deals with these files well and they retain georeferencing. We use the extension bil with these files. |

So, for example, you could output the file as:

$ gdalwarp -t_srs '+proj=utm +zone=44 +datum=WGS84' -of ENVI diff0715_0612_clip.tif diff0715_0612_clip_UTM44.bil

Or for the southern hemisphere:

$ gdalwarp -t_srs '+proj=utm +zone=19 +south +datum=WGS84' -of ENVI 20131228_tsx_20131228_tdx.height.gc.tif Chile_small.bil

- -tr xres yres: This sets the x and y resolution of the output DEM. It uses nearest neighbour resampling by default. So say you wanted to resample to 4 metres:

$ gdalwarp -t_srs '+proj=utm +zone=44 +datum=WGS84' -tr 4 4 diff0715_0612_clip.tif diff0715_0612_clip_UTM44_rs4.tif

LSDRasters assume square cells so you need both x and y distances to be the same.

- -r resampling_method: This allows you to select the resampling method. The options are:

Table 5. Resampling methods for GDAL

| Method | Description |
|---|---|
| near | Nearest neighbour resampling (default, fastest algorithm, worst interpolation quality). |
| bilinear | Bilinear resampling. |
| cubic | Cubic resampling. |
| cubicspline | Cubic spline resampling. |
| lanczos | Lanczos windowed sinc resampling. |
| average | Average resampling, computes the average of all non-NODATA contributing pixels. (GDAL versions >= 1.10.0). |
| mode | Mode resampling, selects the value which appears most often of all the sampled points. (GDAL versions >= 1.10.0). |

So for example you could do a cubic resampling with:

$ gdalwarp -t_srs '+proj=utm +zone=44 +datum=WGS84' -tr 4 4 -r cubic diff0715_0612_clip.tif diff0715_0612_clip_UTM44_rs4.tif

- -te <x_min> <y_min> <x_max> <y_max>: This clips the raster. You can see more about this below under the header Clipping rasters with gdal.

- UTM South: If you are looking at maps in the southern hemisphere, you need to use the +south flag:

$ gdalwarp -t_srs '+proj=utm +zone=44 +south +datum=WGS84' -of ENVI diff0715_0612_clip.tif diff0715_0612_clip_UTM44.bil
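If you know roughly where your raster is, you can work out the UTM zone and the +south flag for the -t_srs string rather than reading them off a map. The sketch below uses the standard rule that UTM zones are 6 degrees of longitude wide and numbered from 1 starting at 180 degrees west. It ignores the handful of irregular zones around Norway and Svalbard, and the function name utm_proj_string is made up for this example.

```python
def utm_proj_string(longitude, latitude):
    """Build a '+proj=utm ...' string for a point given in decimal degrees.

    Sketch only: uses the regular 6 degree zones and ignores the special
    zones around Norway and Svalbard. East and north are positive.
    """
    zone = int((longitude + 180.0) // 6) + 1
    # A longitude of exactly 180 would otherwise give a zone 61.
    zone = min(zone, 60)
    proj = "+proj=utm +zone=%d" % zone
    if latitude < 0:
        proj += " +south"
    return proj + " +datum=WGS84"
```

Taking the headwaters of the Ganges to be at about 79 degrees east and 31 degrees north (approximate values used only for illustration), utm_proj_string(79.0, 31.0) returns '+proj=utm +zone=44 +datum=WGS84', matching the zone 44 examples above.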

Changing Nodata with gdalwarp

Sometimes your source data has nodata values that are weird, like 3.08x10^36 or something. You might want to change these values in your output DEM. You can do this with gdalwarp:

$ gdalwarp -of ENVI -dstnodata -9999 harring_dem1.tif Harring_DEM.bil

In the above case I’ve just changed a DEM from a tif to an ENVI bil but used -9999 as the nodata value.

Changing raster format with gdal_translate

Suppose you have a raster in UTM coordinates (zones can be found here: http://www.dmap.co.uk/utmworld.htm) but it is not in .flt format. You can change the format using gdal_translate (note the underscore).

gdal_translate recognizes many file formats, but for LSDTopoTools you want either:

- The ESRI .hdr labelled format, which is denoted with EHdr.
- The ENVI .hdr labelled format, which is denoted with ENVI. ENVI files are preferred since they work better with GDAL and retain georeferencing.

To set the file format you use the -of flag, an example would be:

$ gdal_translate -of ENVI diff0715_0612_clip_UTM44.tif diff0715_0612_clip_UTM44.bil

Where the first filename.ext is the source file and the second is the output file.

Nodata doesn’t register

In older versions of GDAL, the NoDATA value doesn’t translate when you use gdalwarp and gdal_translate. If this happens to you, the simple solution is to go into the 'hdr' file and add the nodata value. You will need to use gdalinfo to get the nodata value from the source raster, and then in the header of the destination raster, add the line: data ignore value = -9999 (or whatever the nodata value in the source raster is).

| If you want to change the actual nodata value on an output DEM, you will need to use gdalwarp with the -dstnodata flag. |
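If you have many headers to fix, editing each 'hdr' file by hand gets tedious. The sketch below works on the text of a header rather than on the file itself, and adds the data ignore value line only if one is not already there. The function name is hypothetical, and you would still need to read the header into a string and write the result back yourself.

```python
def add_nodata_to_header(header_text, nodata_value):
    """Return header text with a 'data ignore value' line appended if missing.

    Hypothetical helper for illustration: it only edits the header text and
    never touches the raster data itself.
    """
    for line in header_text.splitlines():
        if line.strip().lower().startswith("data ignore value"):
            # The header already declares a nodata value; leave it alone.
            return header_text
    if not header_text.endswith("\n"):
        header_text += "\n"
    return header_text + "data ignore value = " + str(nodata_value) + "\n"
```

Running the function twice on the same text does not add a second line, so it is safe to apply to a whole folder of headers.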

Potential filename errors

It appears that GDAL considers filenames to be case-insensitive, which can cause data management problems in some cases. The following files are both considered the same:

Feather_DEM.bil feather_dem.bil

This can result in an ESRI *.hdr file overwriting an ENVI *.hdr file and causing the code to fail to load the data. To avoid this ensure that input and output filenames from GDAL processes are unique.

3.5.3. Clipping rasters with gdal

You might also want to clip your raster to a smaller area. This can sometimes take ages on GUI-based GISs. An alternative is to use gdalwarp for clipping:

$ gdalwarp -te <x_min> <y_min> <x_max> <y_max> input.tif clipped_output.tif

or you can change the output format:

$ gdalwarp -te <x_min> <y_min> <x_max> <y_max> -of ENVI input.tif clipped_output.bil

Since this is a gdalwarp operation, you can add all the bells and whistles to this, such as:

- changing the coordinate system,
- resampling the DEM,
- changing the file format.

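The main thing to note about the -te operation is that the extent is given in georeferenced coordinates, not in pixels. If you are not changing the coordinate system, these are the coordinates of the source raster (input.tif), and you can look at the extent of the raster using gdalinfo. If you are also reprojecting with -t_srs, gdalwarp interprets the extent in the target coordinate system.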
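When you clip many rasters it can help to assemble the gdalwarp call in a script. The sketch below only builds the list of arguments, using the -te, -tr and -of flags described in this chapter. It does not run GDAL, and the function name and its arguments are invented for this example.

```python
def gdalwarp_clip_command(source, target, x_min, y_min, x_max, y_max,
                          out_format=None, resolution=None):
    """Return a gdalwarp argument list that clips source to the given extent.

    Hypothetical helper for illustration; it only builds the command.
    """
    if x_min >= x_max or y_min >= y_max:
        raise ValueError("The minimum of the extent must be below the maximum")
    cmd = ["gdalwarp", "-te", str(x_min), str(y_min), str(x_max), str(y_max)]
    if resolution is not None:
        # LSDRasters assume square cells, so the same value is used for x and y.
        cmd += ["-tr", str(resolution), str(resolution)]
    if out_format is not None:
        cmd += ["-of", out_format]
    cmd += [source, target]
    return cmd
```

The list can be passed to subprocess.call once you have checked that it is the command you want. Using a single resolution value for both directions keeps the cells square.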

3.5.4. Merging large numbers of rasters

Often websites serving topographic data will supply it to you in tiles. Merging these rasters can be a bit of an annoyance if you use a GIS, but is a breeze with GDAL. The gdal_merge.py program allows you to feed in a file with the names of the rasters you want to merge, so you can use a Linux pipe to merge all of the rasters of a certain format using just two commands:

$ ls *.asc > DEMs.txt

$ gdal_merge.py -of ENVI -o merged_dem.bil --optfile DEMs.txt

The above command works for ascii files but tif or other file formats would work as well.

The exception to this is ESRI files, which have a somewhat bizarre structure which requires a bit of extra work to get the file list. Here is an example python script to process tiles that have a common directory name:

from glob import glob

def GetESRIFileNamesNextMap():
    file_list = []

    # each tile sits in its own directory, so loop over the directories
    for DirName in glob("*/"):
        #print DirName
        directory_without_slash = DirName[:-1]

        # the raster for each tile is the hdr.adf file in its dtme directory
        this_filename = "./"+DirName+directory_without_slash+"dtme/hdr.adf\n"
        print(this_filename)
        file_list.append(this_filename)

    # write the new version of the file
    file_for_output = open("DEM_list.txt",'w')
    file_for_output.writelines(file_list)
    file_for_output.close()

If you use this you will need to modify the directory structure to reflect your own files.
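For tiles that are ordinary files rather than ESRI directories, the file list made with ls can also be built in Python, which is handy if you are working in a shell where ls and redirection behave differently. This is a sketch with made-up function and argument names. It writes bare filenames, so gdal_merge.py would need to be run from the same directory as the tiles.

```python
import glob
import os


def write_raster_list(directory, pattern="*.asc", list_name="DEMs.txt"):
    """Write the names of rasters matching pattern to a list file, one per line.

    Hypothetical helper for illustration. Returns the sorted list of names.
    """
    matches = glob.glob(os.path.join(directory, pattern))
    names = sorted(os.path.basename(path) for path in matches)
    with open(os.path.join(directory, list_name), "w") as list_file:
        for name in names:
            list_file.write(name + "\n")
    return names
```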

3.6. Looking at your data (before you do anything with it).

You might want to have a look at your data before you do some number crunching. To look at the data, there are a number of options. The most common way of looking at topographic data is by using a Geographic Information System (or GIS). The most popular commercial GIS is ArcGIS. Viable open source alternatives are QGIS if you want something similar to ArcGIS, and Whitebox if you want something quite lightweight.

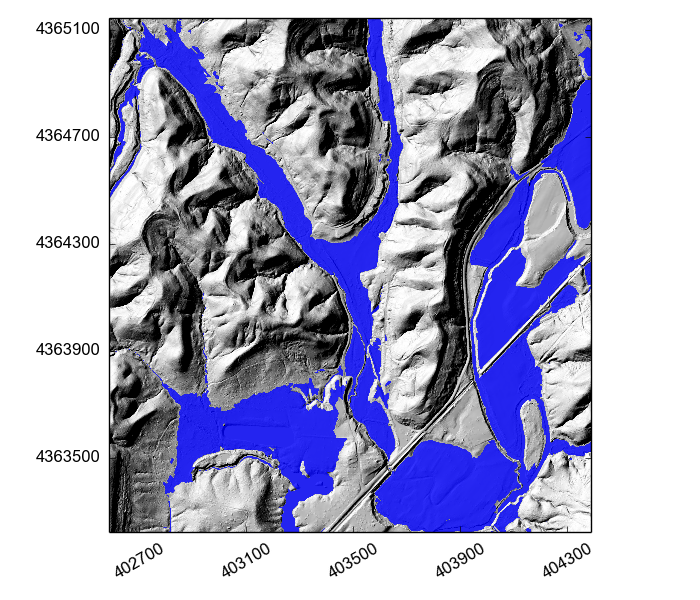

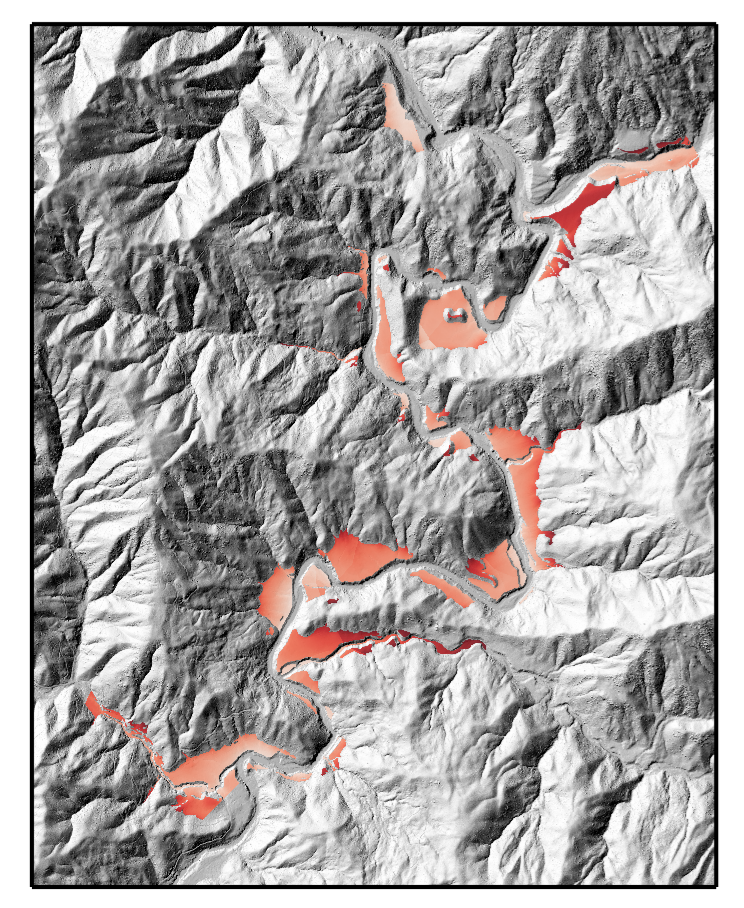

3.6.1. Our lightweight python mapping tools

If you would like something really lightweight, you can use our Python mapping tools, available here: https://github.com/LSDtopotools/LSDMappingTools. These have been designed for internal use for our group, so at this point they aren’t well documented. However, if you know a bit of Python you should be able to get them running. You will need Python with numpy and matplotlib.

To look at a DEM, you will need to download LSDMappingTools.py and TestMappingTools.py from the Github repository. The latter program just gives some examples of usage. At this point all the plotting functions do is plot the DEM and plot a hillshade, but if you have Python working properly you can plot something in a minute or two rather than having to set up a GIS.
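To give a feel for what plotting a DEM and a hillshade involves, here is a minimal sketch using only numpy and matplotlib. It is not the LSDMappingTools code, and the function names are hypothetical. It assumes the DEM has already been read into a 2D array with the first row at the northern edge, and it computes the hillshade as the dot product between the surface normal and a vector pointing at the light source.

```python
import numpy as np


def hillshade(dem, cellsize=1.0, azimuth_deg=315.0, altitude_deg=45.0):
    """Return illumination in [0, 1] for a DEM stored as a 2D array.

    Assumes row 0 is the northern edge and column 0 the western edge.
    """
    dem = np.asarray(dem, dtype=float)
    # np.gradient returns derivatives along rows (southward), then columns (eastward)
    dz_drow, dz_dcol = np.gradient(dem, cellsize)
    dz_dx = dz_dcol       # slope towards the east
    dz_dy = -dz_drow      # slope towards the north
    az = np.radians(azimuth_deg)
    alt = np.radians(altitude_deg)
    # unit vector pointing towards the light source (x east, y north, z up)
    lx = np.sin(az) * np.cos(alt)
    ly = np.cos(az) * np.cos(alt)
    lz = np.sin(alt)
    # dot product of the light vector with the unit surface normal (-dz/dx, -dz/dy, 1)
    shade = (-dz_dx * lx - dz_dy * ly + lz) / np.sqrt(1.0 + dz_dx**2 + dz_dy**2)
    return np.clip(shade, 0.0, 1.0)


def plot_dem_and_hillshade(dem, cellsize=1.0):
    """Show the DEM and its hillshade side by side."""
    import matplotlib.pyplot as plt  # imported here so hillshade() needs only numpy
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
    im = ax1.imshow(dem, cmap="terrain")
    fig.colorbar(im, ax=ax1, label="Elevation")
    ax1.set_title("DEM")
    ax2.imshow(hillshade(dem, cellsize), cmap="gray", vmin=0, vmax=1)
    ax2.set_title("Hillshade")
    plt.show()
```

The default light direction (from the northwest, 45 degrees above the horizon) is a common cartographic choice rather than anything specific to our tools.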

3.7. NoData problems

Often digital elevation models have problems with nodata. The nodata values don’t register properly, or there are holes in your DEM, or the oceans and seas are all at zero elevation, which messes up any analyses. This happens frequently enough that we wrote a program for that. Instructions are given elsewhere in this documentation.
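If you just want a quick check of how much of a DEM is affected, the idea can be sketched in a few lines of numpy. This is only an illustration and not the LSDTopoTools program mentioned above: the function name mask_nodata is hypothetical, and the nodata flag of -9999 is an assumption that you should replace with whatever value your data uses.

```python
import numpy as np


def mask_nodata(dem, nodata_value=-9999.0, sea_level=None):
    """Return a copy of dem with nodata cells replaced by NaN.

    nodata_value is an assumed flag; check your raster header for the real one.
    If sea_level is given, cells at or below it are also treated as nodata.
    """
    dem = np.array(dem, dtype=float)  # np.array makes a copy, so the input is untouched
    mask = dem == nodata_value
    if sea_level is not None:
        mask |= dem <= sea_level
    dem[mask] = np.nan
    return dem
```

Counting the masked cells with np.isnan(cleaned).sum() gives a fast indication of whether holes or flat seas are likely to be a problem.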

3.8. Summary

You should now have some idea as to how to get your hands on some topographic data, and how to use GDAL to transform it into something that LSDTopoTools can use.

4. Getting LSDTopoTools

There are several ways to get our tools, but before you start downloading code, you should be aware of how the code is structured. Much of this chapter covers the details about what you will find when you download the code and how to download it with git, but if you just want to get started you can always skip to the final section.

| This section covers the way you get individual packages from our repository, but if you follow the instructions on Installing LSDTopoTools using VirtualBox and Vagrant then the most commonly used packages will be downloaded automatically. If you use our Vagrant setup you can |

4.1. How the code is structured

Okay, if you are getting the LSDTopoTools for the first time, it will be useful to understand how the code is structured. Knowing the structure of the code will help you compile it (that is, turn the source code into a program). If you just want to grab the code, skip ahead to the section Getting the code using Git. If, on the other hand, you want to know the intimate details of how the code is structured, see the appendix: Code Structure.

4.1.1. Compiling the code

The software is delivered as C++ source code. Before you can run any analyses, you need to compile it, using something called a compiler. You can think of a compiler as a translator, that translates the source code (which looks very vaguely like English) into something your computer can understand, that is in the form of 1s and 0s.

The C++ source code has the extensions .cpp and .hpp. In addition, there are files with the extension .make, which give instructions to the compiler through the utility make. Don’t worry if this all sounds a bit complex. In practice you just need to run make (we will explain how to do that) and the code will compile, leaving you with a program that you can run on your computer.

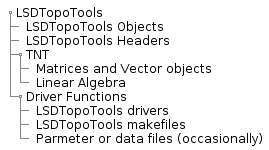

4.1.2. Driver functions, objects and libraries

LSDTopoTools consists of three distinct components:

- Driver functions: These are programs that are used to run the analyses. They take in topographic data and spit out derivative data sets.

- Objects: The actual number crunching goes on inside objects. Unless you are interested in creating new analyses or are part of the development team, you won’t need to worry about objects.

- Libraries: Some of the software needs separate libraries to work. The main one is the TNT library that handles some of the computational tasks. Unless otherwise stated, these are downloaded with the software and you should not need to do anything special to get them to work.

When you download the code, the objects will sit in a root directory. Within this directory there will be driver_functions_* directories as well as a TNT directory.

Driver functions

If you are using LSDTopoTools simply to produce derivative datasets from your topographic data, the programs you will use are driver functions. When compiled, these form self-contained analysis tools. Usually they are run by calling parameter files that point to the dataset you want to analyze and give the parameters you want to use in the analysis.

The .make files, which have the instructions for how the code should compile, are located in the driver_functions_* folders. For example, you might have a driver function folder called /home/LSDTopoTools/driver_functions_chi, and it contains the following files:

$ pwd

/home/LSDTopoTools/driver_functions_chi

$ ls

chi_get_profiles_driver.cpp chi_step1_write_junctions_driver.cpp

chi_get_profiles.make chi_step1_write_junctions.make

chi_m_over_n_analysis_driver.cpp chi_step2_write_channel_file_driver.cpp

chi_m_over_n_analysis.make chi_step2_write_channel_file.make

In this case the .make files are used to compile the code, and the .cpp files are the actual instructions for the analyses.
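Because each driver has its own .make file, compiling everything in a folder amounts to calling make once per file. The sketch below shows one way to do that from Python. It is an illustration under assumptions: the function names are hypothetical, it uses the standard -f option of make to name the makefile, and it assumes make and a C++ compiler are installed.

```python
import subprocess
from pathlib import Path


def make_commands(driver_dir):
    """Return one `make -f <file>` argument list per .make file in driver_dir."""
    makefiles = sorted(Path(driver_dir).glob("*.make"))
    return [["make", "-f", makefile.name] for makefile in makefiles]


def compile_all(driver_dir):
    """Run every make command from inside driver_dir, stopping on the first error."""
    for command in make_commands(driver_dir):
        subprocess.run(command, cwd=driver_dir, check=True)
```

For the folder listed above, make_commands would return four commands, the first being make -f chi_get_profiles.make.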

Objects

LSDTopoTools contains a number of methods to process topographic data, and these methods live within objects. The objects are entities that store and manipulate data. Most users will only be exposed to the driver functions, but if you want to create your own analyses you might have a look at the objects.

The objects sit in the root directory, one level above the driver function folders. They all have names starting with LSD, so, for example, there are objects called LSDRaster, LSDFlowInfo, LSDChannel and so on. Each object has both a .cpp and a .hpp file. If you want to see what is in the objects in excruciating detail, you can go to our automatically generated documentation pages, located here: http://www.geos.ed.ac.uk/~s0675405/LSD_Docs/index.html.

Libraries

The objects in LSDTopoTools require something called the Template Numerical Toolkit (TNT), which handles the rasters and does some computation. It comes with the LSDTopoTools package. You will see it in a subfolder within the folder containing the objects. This library is compiled along with the code using instructions from the makefile. That is, you don’t need to do anything special to get it to compile or install.

There are some other libraries that are a bit more complex which are used by certain LSDTopoTools packages, but we will explain those in later chapters when we cover the tools that use them.

4.1.3. The typical directory layout

4.2. Getting the code using Git

The development versions of LSDTopoTools live at the University of Edinburgh’s code development pages, sourceEd, and if you want to be voyeuristic you can always go to the timeline there and see exactly what we are up to.

If you actually want to download working versions of the code, however, your best bet is to go to one of our open-source working versions hosted on Github.

To get code on Github you will need to know about the version control system git.

What follows is an extremely abbreviated introduction to git.

If you want to know more about it, there are thousands of pages of documentation waiting for you online.

Here we only supply the basics.

4.2.1. Getting started with Git

We start with the assumption that you have installed git on your computer.

If it isn’t installed, you should consult the appendices for instructions on how to install it.

You can call git with:

$ git

| Much of what I will describe below is also described in the Git book, available online. |

If it is your first time using git, you should configure it with a username and email:

$ git config --global user.name "John Doe"

$ git config --global user.email johndoe@example.com

Now, if you are the kind of person who cares what the internet thinks of you, you might want to set your email and username to be the same as on your Github account (this is easily done online) so that your contributions to open source projects will be documented online.

You can configure some other things as well, if you feel like it, such as your editor and merge tool. If you don’t know what those are, don’t bother with these config options:

$ git config --global merge.tool vimdiff

$ git config --global core.editor emacs

If you want a local configuration, you need to be in a repository (see below) and use the --local instead of --global flag.

You can check all your options with:

$ git config --list

core.repositoryformatversion=0

core.filemode=true

core.bare=false

core.logallrefupdates=true

core.editor=emacs

user.name=simon.m.mudd

user.email=Mudd.Pile@pileofmudd.mudd

merge.tool=vimdiff

4.2.2. Pulling a repository from Github

Okay, once you have set up git, you are ready to get some code!

To get the code, you will need to clone it from a repository.

Most of our code is hosted on Github at https://github.com/LSDtopotools, but for now we will run you through an example.

First, navigate to a folder where you want to keep your repositories. You do not need to make a subfolder for the specific repository; git will do that for you.

Go to Github and navigate to a repository you want to grab (in git parlance, you will clone the repository).

Here is one that you might try: https://github.com/LSDtopotools/LSDTopoTools_ChiMudd2014.

If you look at the right side of this website there will be a little box that says HTTPS clone URL.

Copy the contents of this box. In your powershell or terminal window type

$ git clone https://github.com/LSDtopotools/LSDTopoTools_ChiMudd2014.git

The repository will be cloned into the subdirectory LSDTopoTools_ChiMudd2014.

Congratulations, you just got the code!

Keeping the code up to date

Once you have the code, you might want to keep up with updates. To do this, you just go to the directory that contains the repository whenever you start working and run

$ git pull origin master

The origin is the place you cloned the repository from (in this case a specific Github repository) and master is the branch of the code you are working on. Most of the time you will be using the master branch, but you should read the git documentation to find out how to branch your repository.
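If you keep several of our repositories on one machine, the clone-then-update routine described above can be wrapped in a small helper. This is a sketch under assumptions, not one of our tools: the function names are hypothetical, and it only decides which git command applies rather than running it, so that you can inspect the command first.

```python
from pathlib import Path


def repository_name(clone_url):
    """Directory name git creates for a clone URL (last path part minus .git)."""
    name = clone_url.rstrip("/").rsplit("/", 1)[-1]
    return name[:-4] if name.endswith(".git") else name


def clone_or_pull_command(clone_url, parent_dir="."):
    """Return (command, working_directory) to clone the repository or update it.

    If the repository already exists under parent_dir, the command pulls the
    master branch from origin; otherwise it clones into parent_dir.
    """
    target = Path(parent_dir) / repository_name(clone_url)
    if (target / ".git").is_dir():
        return ["git", "pull", "origin", "master"], target
    return ["git", "clone", clone_url], Path(parent_dir)
```

To actually run the result, pass it to subprocess.run(command, cwd=working_directory, check=True), which assumes git is installed and on your PATH.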

Keeping track of changes

Once you have an updated version of the code you can simply run it to do your own analyses.

But if you are making modifications to the code, you probably will want to track these changes.

To track changes use the git commit command.

If you change multiple files, you can commit everything in a folder (including all subdirectories) like this:

$ git commit -m "This is a message that should state what you've just done." .

Or you can commit individual or multiple files:

$ git commit -m "This is a message that should state what you've just done." a.file

$ git commit -m "This is a message that should state what you've just done." more.than one.file

4.2.3. Making your own repository

If you start to modify our code, or want to start keeping track of your own scripts, you might create your own repositories using git and host them on Github, Bitbucket or some other hosting website.

First, you go to a directory where you have some files you want to track. You will need to initiate a git repository here. This assumes you have git installed. Type:

$ git init

to initiate a repository.

If you are downloading an LSDTopoTools repository from Github, you won’t need to init a repository.

So now you have run git init in some folder to initiate a repository.

You will now want to add files with the add command:

$ ls

a.file a_directory

$ git add a.file a_directory

Git adds all files in a folder, including all the files in a named subdirectory.

If you want to add a specific file(s), you can do something like this:

$ git add *.hpp

$ git add A_specific.file

Committing to a repository

Once you have some files in a repository, you can commit them with:

$ git commit -m "Initial project version" .

The . indicates you want everything in the current directory, including subfolders.

Pushing your repository to Github

Github is a resource that hosts git repositories. It is a popular place to put open source code. To host a repository on Github, you will need to set up the repository before syncing your local repository with the github repository. Once you have initiated a repository on Github, it will helpfully tell you the URL of the repository. This URL will look something like this: https://github.com/username/A_repository.git.

To place the repository sitting on your computer on Github, you need to use the push command. For example:

$ git remote add origin https://github.com/simon-m-mudd/OneD_hillslope.git

$ git push -u origin master